AR-HUD – Technology for a Dialog Without Words

- Two picture generating units with different reproduction processes complement each other in the AR-HUD optical system

- Complex process for graphics generation in the new AR-Creator

- eHorizon information forms basis for future driver information

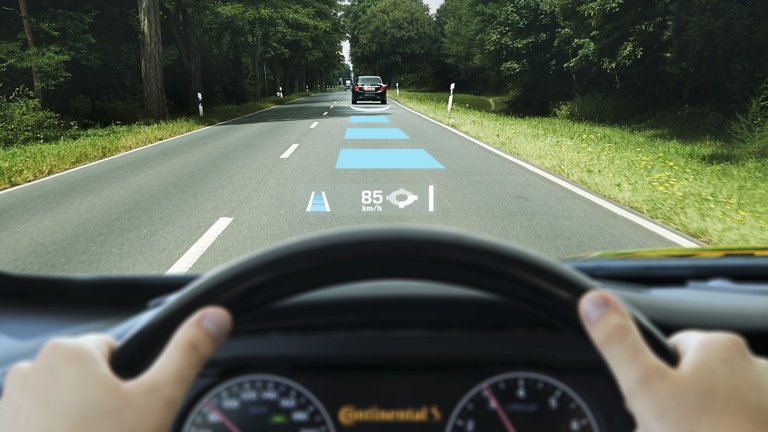

Babenhausen/Germany, July 21, 2014. The international automotive supplier Continental is developing an Augmented Reality Head-up Display (AR-HUD) in order to facilitate a new form of dialog between the vehicle and the driver. The reality of the traffic conditions as seen by the driver is extended (augmented) in this display system – graphic images placed precisely into the exterior view by means of optical projection embed information directly into the traffic situation. This makes the AR-HUD one of the most innovative enhancements of the human machine interface (HMI) in vehicles.

This innovation is part of a long history at Continental. "We have provided driver information for over 110 years. Beginning with the speedometer, we have become one of the leading developers of display and operating solutions for a wide variety of vehicles," said Eelco Spoelder, head of the Continental Instrumentation & Driver HMI business unit. "HUD technology is today's equivalent of the eddy current speedometer in 1902 – a fundamental aid to the driver. This is particularly true for the AR-HUD."

The AR-HUD has now reached an advanced stage of pre-production development. The demo vehicle serves both to show feasibility and to provide valuable insights for series development. Continental is planning to achieve production readiness in 2017.

AR-HUD: seeing is understanding

"The AR-HUD optical system enables the driver to see an augmented display of the status of driver assistance systems and the significance of this information in their direct field of view," said Dr. Pablo Richter, a HUD technology expert in the Continental Interior division. "As a new part of the human machine interface, the current, pre-production AR-HUD is already closely connected with the environmental sensors of driver assistance systems, as well as GPS data, map material, and vehicle dynamics data."

For the first time, Continental has implemented this new technology at an advanced development stage in a demo vehicle. Radar sensors are integrated behind the front bumper and a CMOS mono-camera is integrated on the base of the rear view mirror. The functions selected as relevant for the first AR-HUD applications are the Advanced Driver Assistance Systems (ADAS) Adaptive Cruise Control (ACC) and Lane Departure Warning (LDW), together with route information from the navigation system.

"If one of the driver assistance systems detects a relevant situation, virtual graphical information in the AR-HUD makes the driver aware of this," continued Richter. "In addition to the direct increase in safety, this form of dialog is also a key technology for automated driving. The augmentation makes it easier for the person driving to build up trust in the new driving functions."

Two picture levels with clearly different projection distances – based on two different picture generation units

In the new Continental AR-HUD, two picture levels with different projection distances are possible: the 'near' or 'status' level and the 'remote' or 'augmentation' level. The 'near' status level appears to float at the end of the engine hood in front of the driver and shows the driver selected status information such as the current speed, applicable restrictions like no-passing zones and speed limits, or the current settings of the Adaptive Cruise Control (ACC). To be able to read this information, the driver only has to lower their eyes by about 6°. The status information appears in a field of vision of size 5° x 1° (corresponding to 210 mm x 42 mm) with a projection distance of 2.4 m. This corresponds to the virtual picture of a "conventional" head-up display, and is based on mirror optics and a picture generating unit (PGU). The latter is made up of a thin film transistor (TFT) display, the content of which is backlit using LEDs. This unit has been integrated in an extremely compact design in the upper section of the AR-HUD module. The mirror optics enlarge the content of the display for the virtual representation. This is achieved using a curved mirror. Continental uses a carefully chosen optical design to implement two picture levels over different projection distances in the AR-HUD. Here, the respective optical paths of both levels slightly overlap internally. The optical path of the near level only uses the upper edge zone of the large AR-HUD mirror (the large asphere) without another "folding mirror." This part of the AR-HUD system resembles the state-of-the-art technology currently integrated by Continental as a second generation HUD in factory-built vehicles.

Augmentation with cinema technology in the car

"The augmentation level naturally plays the main role in the AR-HUD. It provides for the augmented representation of display symbols directly on the road at a projection distance of 7.5 m in front of the driver. The content is adapted to current traffic conditions," said Richter. The contents of this remote level are generated using a new picture generating unit that Continental first presented at the IAA 2013. The graphical elements are generated with a digital micromirror device (DMD) in the same manner as digital cinema projectors. The core of the PGU is an optical semiconductor with a matrix of several hundred thousand tiny mirrors, which can be tilted individually using electrostatic fields. The micromirror matrix is lit alternately, in quick time-sequential succession, by three colored LEDs (red, blue, and green). The collimation (parallel direction) of the three-color light takes place through a tilted mirror with a color-filter function ('dichroic mirror'). These particular mirrors either allow the light to pass through or reflect it, depending on the color. All micromirrors that are to show a given color are tilted in sync with the LED of that color, so that they reflect the incoming light through a lens and thus depict this color on a subsequent focusing screen as individual pixels. This happens in the same way for each of the three colors. The human eye "averages" all three color pictures on the focusing screen, which gives the impression of a fully colored picture.

Seen from the front side of the focusing screen, the subsequent optical path corresponds to that of a conventional HUD: the image is reflected from the focusing screen via a first mirror (folding mirror) onto the second, larger mirror (AR-HUD mirror). From there, it is reflected onto the windshield. The emitting area of the optical system for the augmentation is nearly DIN A4 size. This results in a field of vision of size 10° x 4.8° in the augmentation level, which corresponds geometrically to an approximate width of the augmentable viewing area of 130 cm and a height of 63 cm in the direct field of vision. To read information at this distance level, the driver only has to lower their eyes slightly, by 2.4°. The picture generating units of both the status and augmentation levels produce a display with a luminance of over 10,000 candela per square meter, adapted to the ambient lighting. This means that the display is easy to read in nearly all ambient lighting conditions.
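The relationship between the angular field of vision and the size of the virtual picture can be checked with basic trigonometry. The following is a minimal sketch, assuming a flat virtual picture that is centered on and perpendicular to the line of sight; the function name `virtual_image_extent` is illustrative and not part of any Continental software.

```python
import math


def virtual_image_extent(fov_deg: float, distance_m: float) -> float:
    """Linear extent (in meters) covered by an angular field of vision
    at a given projection distance, assuming a flat, centered picture."""
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


# Status ('near') level: 5 deg x 1 deg at a projection distance of 2.4 m
near_w = virtual_image_extent(5.0, 2.4)
near_h = virtual_image_extent(1.0, 2.4)

# Augmentation ('remote') level: 10 deg x 4.8 deg at 7.5 m
far_w = virtual_image_extent(10.0, 7.5)
far_h = virtual_image_extent(4.8, 7.5)

print(f"near level:   {near_w * 1000:.0f} mm x {near_h * 1000:.0f} mm")
print(f"remote level: {far_w * 100:.0f} cm x {far_h * 100:.0f} cm")
```

Under these assumptions the results agree with the quoted dimensions to within rounding: about 210 mm x 42 mm for the status level, and roughly 130 cm x 63 cm for the augmentation level.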

This system approach for an AR-HUD in Continental's test vehicle, with two picture levels over different projection distances, has a major advantage. In most traffic conditions, content can be implemented at the remote level and the near level concurrently. This allows all pertinent driving and status information to be shown within the direct view of the driver.

Data fusion and graphics generation in the AR-Creator

Numerous simulations and Continental subject tests demonstrated that drivers feel most comfortable when the augmentation begins approximately 18 m to 20 m in front of the vehicle and continues up to a distance of around 100 m, depending on the route. Peter Giegerich, who is responsible for developing the AR-Creator, explains how this graphical information is generated and positioned correctly: "The AR-Creator is an incredibly ambitious new development. The control unit has to evaluate a number of sensor data streams in order to place the graphical elements in the exact position on the focusing screen from where they can be reflected precisely into the driver's AR-HUD field of vision. So considerable arithmetic is required."
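To give a feeling for the arithmetic involved, the sketch below projects a point on the road onto a virtual picture plane 7.5 m in front of the driver's eyes. It is an illustration under simplifying assumptions, not the AR-Creator's actual method: the road is flat, the picture plane is perpendicular to the driving direction, and the eye height of 1.2 m, the coordinate convention, and the names `Vec3`, `project_to_image_plane`, and `look_down_angle_deg` are all hypothetical.

```python
import math
from typing import NamedTuple, Optional, Tuple


class Vec3(NamedTuple):
    x: float  # meters ahead of the reference point
    y: float  # meters to the left
    z: float  # meters above the road surface


def project_to_image_plane(
    eye: Vec3, point: Vec3, plane_distance_m: float = 7.5
) -> Optional[Tuple[float, float]]:
    """Intersect the line of sight eye -> point with a vertical plane
    plane_distance_m ahead of the eye. Returns (lateral, vertical)
    offsets in meters relative to the eye, or None if the point is
    not in front of the eye."""
    dx = point.x - eye.x
    if dx <= 0.0:
        return None
    scale = plane_distance_m / dx
    return (scale * (point.y - eye.y), scale * (point.z - eye.z))


def look_down_angle_deg(eye: Vec3, point: Vec3) -> float:
    """Angle below the horizontal at which the eye sees the point."""
    return math.degrees(math.atan2(eye.z - point.z, point.x - eye.x))


eye = Vec3(0.0, 0.0, 1.2)  # assumed eye height, not from the source
for distance_m in (20.0, 100.0):
    road_point = Vec3(distance_m, 0.0, 0.0)
    lateral, vertical = project_to_image_plane(eye, road_point)
    angle = look_down_angle_deg(eye, road_point)
    print(f"{distance_m:5.0f} m ahead: {vertical:+.3f} m in the picture plane, "
          f"{angle:.2f} deg below horizontal")
```

Because the result depends directly on `eye`, the same road point lands at a different place in the picture when the driver's eye position changes, which is why the eye position has to be known before a symbol can be placed.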

The AR-Creator undertakes a data fusion from three sources. The mono-camera provides the geometry of the road layout. "Euler spirals," or mathematical descriptions of how the curvature changes in the lane in front of the vehicle, are also taken into consideration. The size, position, and distance of detectable objects in front of the vehicle are obtained from a combination of radar sensor data and interpretation of the camera data. Lastly, Continental's eHorizon provides the map frame in which the data sensed on the spot is interpreted. This eHorizon in the demo vehicle is static and only uses navigational data material. Continental is already working on the series production of networked and highly dynamic eHorizon products, enabling data from a wide variety of sources (e.g. Vehicle-2-Vehicle, traffic control centers, etc.) to be prepared for display in the AR-HUD. The vehicle's position on a digital map is determined using a fusion of vehicle dynamics, camera, and GPS data.
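An Euler spiral (clothoid) is a curve whose curvature changes linearly with the distance travelled along it. The following sketch shows how lane points could be sampled from such a description by numerical integration. It is only an illustration: the function `clothoid_points`, its parameters, and the coordinate convention (x ahead, y to the left) are assumptions, and the source does not describe how the AR-Creator evaluates the curve.

```python
import math
from typing import List, Tuple


def clothoid_points(
    kappa0: float, kappa_rate: float, length_m: float, step_m: float = 0.5
) -> List[Tuple[float, float]]:
    """Sample (x, y) points along a curve whose curvature is
    kappa0 + kappa_rate * s at arc length s (units: 1/m and 1/m^2).

    The heading is the integral of the curvature; the position is the
    integral of the heading direction, approximated by the midpoint rule.
    """
    points = [(0.0, 0.0)]
    x = y = 0.0
    n_steps = int(round(length_m / step_m))
    for i in range(n_steps):
        s_mid = (i + 0.5) * step_m
        heading = kappa0 * s_mid + 0.5 * kappa_rate * s_mid ** 2
        x += step_m * math.cos(heading)
        y += step_m * math.sin(heading)
        points.append((x, y))
    return points


# Hypothetical lane: straight at the vehicle, bending gradually to the left
lane = clothoid_points(kappa0=0.0, kappa_rate=1e-4, length_m=100.0)
x_end, y_end = lane[-1]
print(f"lane point 100 m along the curve: x = {x_end:.1f} m, y = {y_end:.1f} m")
```

With `kappa_rate` set to zero the same function yields a straight line or a circular arc, so one description covers straight sections, constant curves, and the transitions between them.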

The AR-Creator also uses the merged data to calculate how the geometric road layout in front of the driver looks from the driver's position. This is possible because the driver's eye position is known. In the demo vehicle, the driver sets the correct position of the "eye box" once before starting to drive. This process can be automated with an interior camera in series production. The camera detects the driver's eye position and can adjust the position of the eye box accordingly. The term "eye box" denotes a rectangular area where the height and width correspond to a theoretical "viewing window." The driver only gets the complete AR-HUD picture while looking at the road through this window. The vehicle passengers do not see the content shown by the HUD and AR-HUD.

"Based on the adjustable position of the eye box, the AR-Creator knows where the driver's eyes are, and from which perspective they are seeing the road and their surroundings," explained Giegerich. "If an assistance system reports a relevant observation, corresponding virtual information can be created at the right point in the AR-HUD."

Less is more!

"A lot of development work went into designing the virtual information," said HMI developer Stephan Cieler. "After numerous design studies and subject tests, our motto for the AR-HUD was 'Less is more.'"

The initial idea to place a transparent color carpet over the lane was therefore quickly discarded. "We wanted to only show the driver the minimum graphical information necessary, so as not to cover the real traffic view," stated Cieler.

For example, angular arrowheads used as navigation aids can "lie" flat on the road. In the case of a change of direction, they can move to be upright and turn toward the new direction of travel, so that they work like a direction sign. This design enables virtual information to be given in narrow curves, although a real augmentation in this situation is not possible due to the lack of perspective visual range.

The warning for crossing a traffic lane boundary is implemented discreetly. The traffic lane boundaries are only highlighted by the demo vehicle AR-HUD if the driver is most likely crossing them unintentionally.

If a future eHorizon receives information about an accident in advance, a danger symbol with a correspondingly high attention value can be placed in the driver's field of view well in advance.

"Once this new form of interaction with the driver has been installed, there are many options in the HMI to provide the driver with situational and proactive information. The vehicle and driver are then speaking to each other, even if this dialog takes place without words," said Richter.